Test-Driven Development: A Review

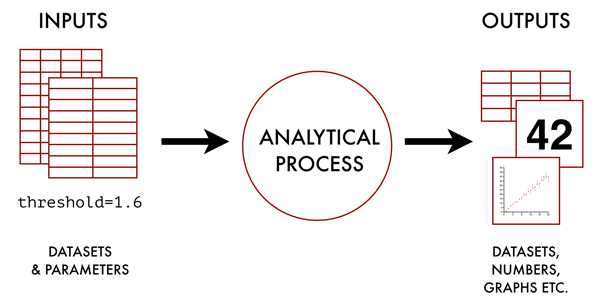

Since a key motivation for developing test-driven data analysis (TDDA) has been test-driven development (TDD), we need to conduct a lightning tour of TDD before outlining how we see TDDA developing. If you are already familiar with test-driven development, this may not contain too much that is new for you, though we will present it with half an eye to the repurposing of it that we plan as we move towards test-driven data analysis.

Test-driven development (TDD) has gained notable popularity as an approach to software engineering, both in its own right and as a key component of the Agile development methodology. Its benefits, as articulated by its adherents, include higher software quality, greater development speed, improved flexibility during development (i.e., more ability to adjust course during development), earlier detection of bugs and regressions,[1] and an increased ability to restructure ("refactor") code.

The Core Idea of Test-Driven Development

Automation + specification + verification + refactoring

The central idea in test-driven development is that of using a comprehensive suite of automated tests to specify the desired behaviour of a program and to verify that it is working correctly. The goal is to have enough, sufficiently detailed tests to ensure that when they all pass we feel genuine confidence that the system is functioning correctly.

The canonical test-driven approach to software development consists of the following stages:

- First, a suite of tests is written specifying the correct behaviour of a software system. As a trivial example, if we are implementing a function, f, to compute the sum of two inputs, a and b, we might specify a set of correct input-output pairs. In TDD, we structure our tests as a series of assertions, each of which is a statement that must be satisfied in order for the test to pass. In this case, some possible assertions, expressed in pseudo-code, would be:

      assert f( 0, 0) = 0
      assert f( 1, 7) = 8
      assert f(-2, 17) = 15
      assert f(-3, +3) = 0

  Importantly, the tests should also, in general, check and specify the generation of errors and the handling of so-called edge cases. Edge cases are atypical but valid cases, which might include extreme input values, handling of null values and handling of empty datasets. For example:

      assert f("a", 7) --> TypeError
      assert f(MAX_FLOAT, MAX_FLOAT) = Infinity

  NOTE This is not a comprehensive set of tests for f. We'll talk more about what might be considered adequate for this function in later posts. The purpose of this example is simply to show the general structure of typical tests.

- An important aspect of testing frameworks is that they allow tests to take the form of executable code that can be run even before the functionality under test has been written. At this stage, since we have not even defined f, we expect the tests not to pass, but to produce errors such as "No such function: f". Once a minimal definition for f has been provided, such as one that always returns 0, or that returns no result, the errors should turn into failures, i.e. assertions that are not true.

- When we have a suite of failing tests, software is written with the goal of making all the tests pass.

- Once all the tests pass, TDD methodology dictates that coding should stop because if the test suite is adequate (and free of errors) we have now demonstrated that the software is complete and correct. Part of the TDD philosophy is that if more functionality is required, one or more further tests should be written to specify and demonstrate the need for more (or different) code.

- There is one more important stage in test-driven development, namely refactoring. This is the process of restructuring, simplifying or otherwise improving code while maintaining its functionality (i.e., keeping the tests passing). It is widely accepted that complexity is one of the biggest problems in software, and simplifying code as soon as the tests pass allows us to attempt to reduce complexity as early as possible. It is a recognition of the fact that the first successful implementation of some feature will typically not be the most direct and straightforward.
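The refactoring stage can be illustrated with a small sketch. The names f_first, f_refactored, CASES and passes_all are hypothetical, introduced only for this illustration: f_first is a deliberately roundabout first attempt that handles the integer cases, and f_refactored is the simplification. The same input-output pairs from the pseudo-code assertions are checked against both, which is what gives us the confidence to replace one with the other.

```python
def f_first(a, b):
    """A deliberately roundabout first attempt (hypothetical), for integer b only."""
    total = a
    step = 1 if b >= 0 else -1
    for _ in range(abs(b)):
        total += step
    return total


def f_refactored(a, b):
    """The simplified version produced by refactoring."""
    return a + b


# The input-output pairs from the pseudo-code assertions in the first stage.
CASES = [((0, 0), 0), ((1, 7), 8), ((-2, 17), 15), ((-3, 3), 0)]


def passes_all(func):
    """Return True if func gives the expected output for every case."""
    return all(func(*args) == expected for args, expected in CASES)


print(passes_all(f_first), passes_all(f_refactored))
```

Both implementations satisfy the same checks, so the simpler one can be adopted without changing the specified behaviour.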

The philosophy of writing tests before the code they are designed to validate leads some to suggest that the second "D" in TDD (development) should really stand for design (e.g. Allen Holub[3]). This idea grows out of the observation that with TDD, testing is moved from its traditional place towards the end of the development cycle to a much earlier and more prominent position where specification and design would traditionally occur.

TDD advocates tend to argue for making tests very quick to run (preferably mere seconds for the entire suite) so that there is no impediment to running them frequently during development, not just between each code commit,[4] but multiple times during the development of each function.

Another important idea is that of regression testing. As noted previously, a regression is a defect that is introduced by a modification to the software. A natural consequence of maintaining and using a comprehensive suite of tests is that when such regressions occur, they should be detected almost immediately. When a bug does slip through without triggering a test failure, the TDD philosophy dictates that before it is fixed, one or more failing tests should be added to demonstrate the incorrect behaviour. By definition, when the bug is fixed, these new tests will pass unless they themselves contain errors.
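As a minimal sketch of this "test first, then fix" practice, suppose a bug report says that a word-counting function miscounts when words are separated by two spaces. Everything here is hypothetical and invented for illustration (count_words_buggy, count_words_fixed, make_regression_test and run); the point is only that the new test fails against the old code and passes once the fix is in place.

```python
import unittest


def count_words_buggy(text):
    """Hypothetical shipped version: miscounts when words are separated by several spaces."""
    return len(text.split(" "))


def count_words_fixed(text):
    """Hypothetical fix: split on any run of whitespace."""
    return len(text.split())


def make_regression_test(func):
    """Build a suite containing one test that pins down the reported bug for func."""
    class TestDoubleSpace(unittest.TestCase):
        def test_double_space(self):
            self.assertEqual(func("a  b"), 2)
    return unittest.defaultTestLoader.loadTestsFromTestCase(TestDoubleSpace)


def run(func):
    """Run the regression test against func and return the TestResult."""
    result = unittest.TestResult()
    make_regression_test(func).run(result)
    return result


before = run(count_words_buggy)   # the new test demonstrates the bug
after = run(count_words_fixed)    # and passes once the bug is fixed
print(len(before.failures), after.wasSuccessful())
```

Once added, the test stays in the suite, so the same defect cannot quietly return.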

Common Variations, Flavours and Implementations

A distinction is often made between unit tests and system tests (also known as integration tests). Unit tests are supposed to test low-level software units (such as individual functions, methods or classes). There is often a particular focus on these low-level unit tests, partly because these can often be made to run very quickly, and partly (I think) because there is an implicit belief or assumption that if each individual component is well tested, the whole system built out of those components is likely to be reliable. (Personally, I think this is a poor assumption.)

In contrast, system tests and integration tests exercise many parts of the system, often completing larger, more realistic tasks, and more often interfacing with external systems. Such tests are often slower and it can be hard to avoid their having side effects (such as updating entries in databases).

The distinction, however, between the different levels is somewhat subjective, and some organizations give more equal or greater weight to higher level tests. This will be an interesting issue as we consider how to move towards test-driven data analysis.

Another practice popular within some TDD schools is that of mocking. The general idea of mocking is to replace some functionality (such as a database lookup, a URL fetch, a disk write, a trigger event or a function call) with a simpler function call or a static value. This is done for two main reasons. First, if the mocked functionality is expensive, or has side effects, test code can often be made much faster and side-effect free if its execution is bypassed. Secondly, mocking allows a test to focus on the correctness of a particular aspect of functionality, without any dependence on the external part of the system being mocked out.

Other TDD practitioners are less keen on mocking, feeling that it leads to less complete and less realistic testing, and raises the risk of missing some kinds of defects. (Those who favour mocking also tend to place a strong emphasis on unit testing, and to argue that more expensive, non-mocked tests should form part of integration testing, rather than part of the more frequently run core unit test suite.)
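To make the idea of mocking concrete, here is a small sketch using the Mock class from Python's standard unittest.mock module. The functions fetch_exchange_rate and convert, and the currency code "XYZ", are hypothetical and exist only for this illustration. The expensive lookup is passed in as a parameter so that the test can substitute a static value; this is one simple way to mock, not the only one.

```python
from unittest import mock


def fetch_exchange_rate(currency):
    """Hypothetical slow lookup with an external dependency; never called in the test."""
    raise RuntimeError("would contact an external service")


def convert(amount, currency, lookup=fetch_exchange_rate):
    """Convert an amount using the rate returned by lookup."""
    return amount * lookup(currency)


# Replace the expensive lookup with a mock that returns a static value.
fake_lookup = mock.Mock(return_value=2.0)
result = convert(10, "XYZ", lookup=fake_lookup)

# The mock records how it was used, so the interaction can be checked too.
fake_lookup.assert_called_once_with("XYZ")
print(result)
```

The test exercises only the arithmetic in convert; whether the real lookup works is left to a slower, non-mocked test, which is exactly the trade-off the two camps disagree about.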

While no special software is strictly required in order to follow a broadly test-driven approach to development, good tools are extremely helpful. There are standard libraries that support this for most mainstream programming languages. The xUnit family of test software (e.g. CUnit for C, jUnit for Java, unittest for Python) uses a common architecture designed by Kent Beck.[2] It is worth noting that the rUnit package is such a system for use with the popular data analysis package R.

Example

As an example, the following Python code tests a function f, as described above, using Python's unittest module. Even if you are completely unfamiliar with Python, you will be able to see the six crucial lines that implement exactly the six tests described in pseudo-code above, in this case through four separate test methods.

    import sys
    import unittest

    def f(a, b):
        return a + b

    class TestAddFunction(unittest.TestCase):
        def testNonNegatives(self):
            self.assertEqual(f(0, 0), 0)
            self.assertEqual(f(1, 7), 8)

        def testNegatives(self):
            self.assertEqual(f(-2, 17), 15)
            self.assertEqual(f(-3, +3), 0)

        def testStringInput(self):
            self.assertRaises(TypeError, f, "a", 7)

        def testOverflow(self):
            self.assertEqual(f(sys.float_info.max, sys.float_info.max),
                             float('inf'))

    if __name__ == '__main__':
        unittest.main()

If this code is run, including the function definition for f, the output is as follows:

    $ python add_function.py
    ....
    ----------------------------------------------------------------------
    Ran 4 tests in 0.000s

    OK

Here, each dot signifies a passing test. However, if this is run without defining f, the result is the following output:

    $ python add_function.py
    EEEE
    ======================================================================
    ERROR: testNegatives (__main__.TestAddFunction)
    ----------------------------------------------------------------------
    Traceback (most recent call last):
      File "add_function.py", line 13, in testNegatives
        self.assertEqual(f(-2, 17), 15)
    NameError: global name 'f' is not defined

    ======================================================================
    ERROR: testNonNegatives (__main__.TestAddFunction)
    ----------------------------------------------------------------------
    Traceback (most recent call last):
      File "add_function.py", line 9, in testNonNegatives
        self.assertEqual(f(0, 0), 0)
    NameError: global name 'f' is not defined

    ======================================================================
    ERROR: testOverflow (__main__.TestAddFunction)
    ----------------------------------------------------------------------
    Traceback (most recent call last):
      File "add_function.py", line 20, in testOverflow
        self.assertEqual(f(sys.float_info.max, sys.float_info.max),
    NameError: global name 'f' is not defined

    ======================================================================
    ERROR: testStringInput (__main__.TestAddFunction)
    ----------------------------------------------------------------------
    Traceback (most recent call last):
      File "add_function.py", line 17, in testStringInput
        self.assertRaises(TypeError, f, "a", 7)
    NameError: global name 'f' is not defined

    ----------------------------------------------------------------------
    Ran 4 tests in 0.000s

    FAILED (errors=4)

Here the four E's at the top of the output represent errors when running the tests. If a dummy definition of f is provided, such as:

    def f(a, b):
        return 0

the tests will fail, producing F, rather than raising the errors that result in E's.

Benefits of Test-Driven Development

Correctness. The most obvious reason to adopt test-driven development is the pursuit of higher software quality. TDD proponents certainly feel that there is considerable benefit to maintaining a broad and rich set of tests that can be run automatically. There is rather more debate about how important it is to write the tests strictly before the code they are designed to test. I would say that to qualify as test-driven development, the tests should be produced no later than immediately after each piece of functionality is implemented, but purists would take a stricter view.

Regression detection. The second benefit of TDD is in the detection of regressions, i.e. failures of code in areas that previously ran successfully. In practice, regression testing is even more powerful than it sounds because not only can many different failure modes be detected by a single test, but experience shows that there are often areas of code that are susceptible to similar breakages from many different causes and disturbances. (This can be seen as a rare case of combinatorial explosion working to our advantage: there are many ways to get code wrong, and far fewer to get it right, so a single test can catch many different potential failures.)

Specification, Design and Documentation. One of the stronger reasons for writing tests before the functions they are designed to verify is that the test code then forms a concrete specification. In order even to write the test, a certain degree of clarity has to be brought to the question of precisely what the function that is being written is supposed to do. This is the key insight that leads towards the idea of TDD as test-driven design over test-driven development. A useful side effect of the test suite is that it also forms a precise and practical form of documentation as to exactly how the code can be used successfully, and one that, by definition, has to be kept up to date, a perennial problem for documentation.

Refactoring. The benefits listed so far are relatively unsurprising. The fourth is more profound. In many software projects, particularly large and complex ones, once the software is deemed to be working acceptably well, some areas of the code come to be regarded as too dangerous to modify, even when problems are discovered. Developers (and managers) who know how much pain and effort was required to make something work (or more-or-less work) become fearful that the risks associated with fixing or upgrading code are simply too high. In this way, code becomes brittle and neglected and thus essentially unmaintainable.

In my view, the single biggest benefit of test-driven development is that it goes a long way to eliminating this syndrome, allowing us to re-write, simplify and extend code safely, confident in the knowledge that if the tests continue to pass, it is unlikely that anything very bad has happened to the code. The recommended practice of refactoring code as soon as the tests pass is one aspect of this, but the larger benefit of maintaining a comprehensive set of tests is that such refactoring can be performed at any time.

These are just the most important and widely recognized benefits of TDD. Additional benefits include the ability to check that code is working correctly on new machines or systems, or in any other new context, providing a useful baseline of performance (if timed and recorded) and providing an extremely powerful resource if code needs to be ported or reimplemented.

1. A software regression is a bug in a later version of software that was not present in a previous version of the software. It contrasts with bugs that may always have been present but were not detected.

2. Kent Beck, Test-Driven Development, Addison Wesley (Vaseem) 2003.

3. Allen Holub, Test-Driven Design, Dr. Dobb's Journal, May 5th 2014. https://www.drdobbs.com/architecture-and-design/test-driven-design/240168102.

4. Most non-trivial software development uses a so-called revision control system to provide a comprehensive history of versions of the code. Developers normally run code frequently, and typically commit changes to the revision-controlled repository somewhat less frequently (though still, perhaps, many times a day). With TDD, the tests form an integral part of the code base, and it is common good practice to require that code is only committed when the tests pass. Sometimes this requirement is merely a rule or convention, while in other cases systems are set up in such a way as to enable code to be committed only when all of its associated tests pass.